Libot

Autonomous Mobile Manipulation Robot for Libraries

I would like to express my gratitude to Professor Qingsong Xu for his invaluable guidance. Special thanks to my partner, Mr. Zhang Tong, for his dedication and countless hours spent discussing and debugging code with me in the FST AI room.

Libot is a mobile robot designed to automate tasks in libraries.

Visit my Git repository, 24EME_FYP, for implementation details.

Key Features:

- Book Detection System: Uses the YOLOv8 deep learning model to recognize book spine labels in RGB images, enabling efficient book cataloging.

- Deep-Learning-Based Object Grasping: Integrated with MoveIt through the move_group API for precise pick-and-place book handling. Grasps are generated by GPD (Grasp Pose Detection), a deep-learning algorithm by Andreas ten Pas.

- SLAM Mapping and Navigation: Uses Simultaneous Localization and Mapping (SLAM) to adapt to dynamic library layouts, improving navigation and operational efficiency. Mapping is done with hector_mapping, developed by Team Hector at TU Darmstadt.

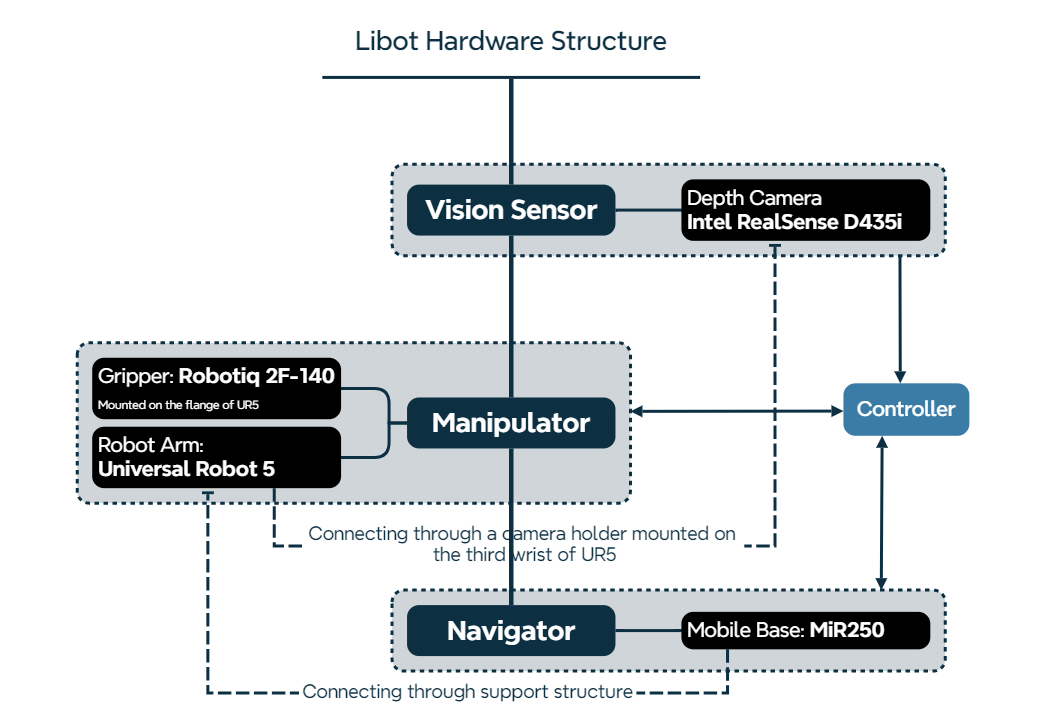

Hardware

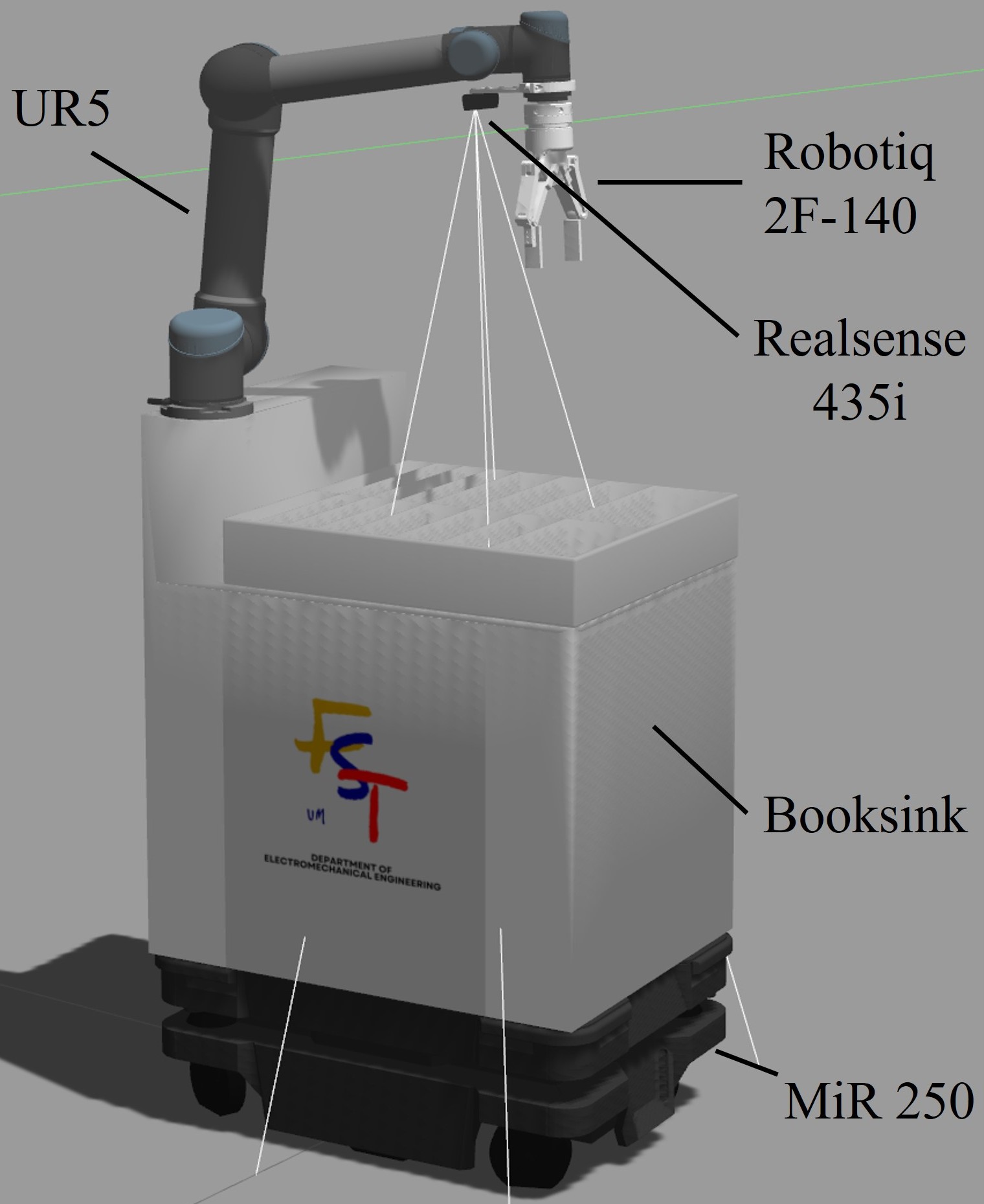

Libot’s hardware consists of three main modules: vision sensor, manipulator, and navigator. These modules are connected to a central control computer.

- Navigator Module: Uses the MiR250 mobile robot, which provides mobility and carries a customized book sink. The book sink serves as both a storage unit for books and a mounting platform for the manipulator.

- Manipulator Module: Features a UR5 robotic arm mounted on the book sink. Attached to the UR5 are:

  - Robotiq 2F-140 Gripper: Enables Libot to grasp and manipulate objects.

  - Intel RealSense D435i Depth Camera: Part of the vision sensor module, mounted on the UR5's third wrist link via a dedicated holder, providing real-time depth perception.

Control Methodology & Simulation Environment

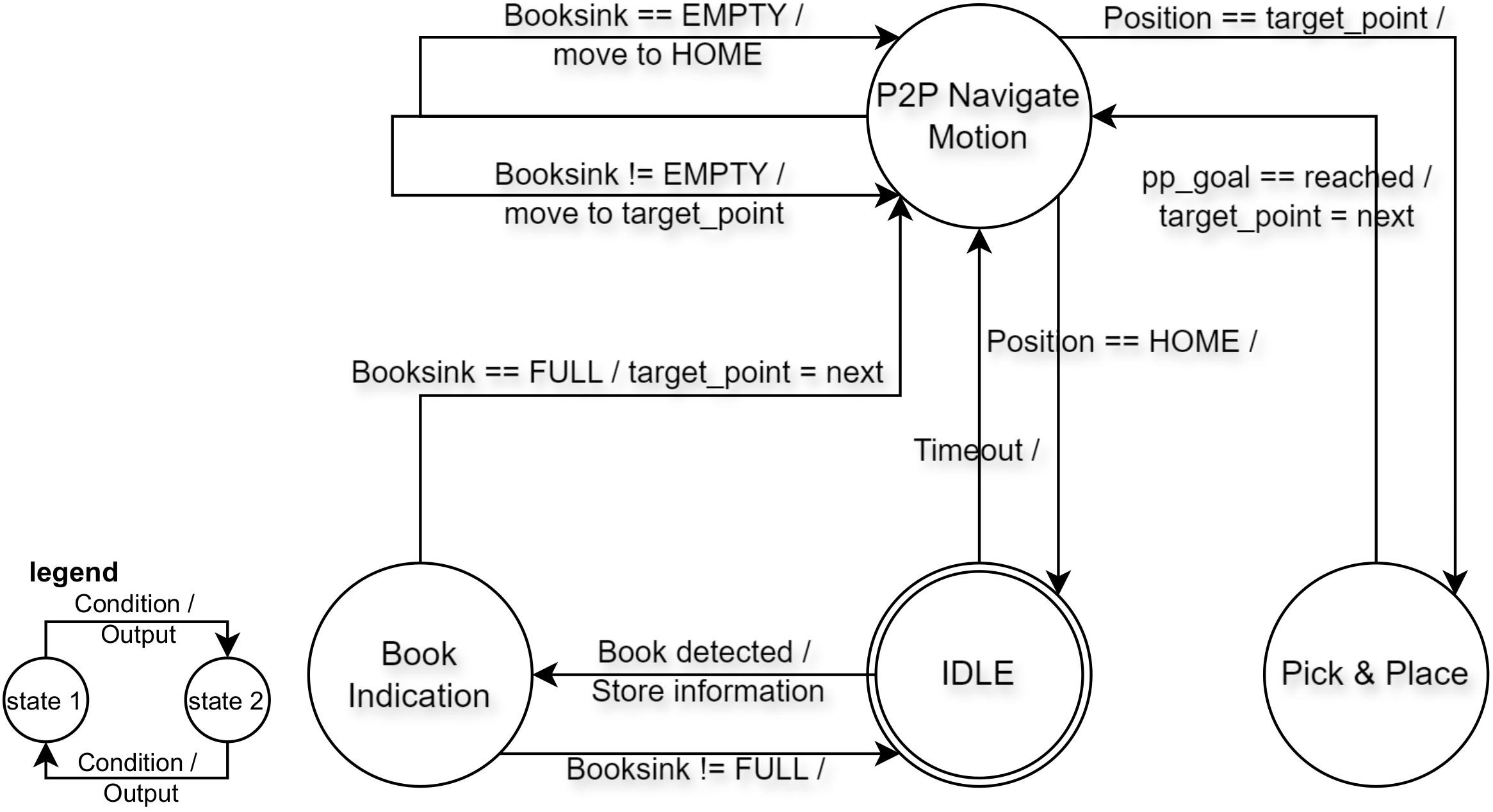

The figure above shows the conceptual program design of Libot, which is organized into four states:

- `IDLE`: Libot is not in use, and no task is executed in this state.

- `Book_Indication`: Detecting a book placed in the book sink triggers the transition from `IDLE` to `Book_Indication`. During this transition, our vision model (3.16) recognizes the tag information of the newly placed book and stores it in shared memory. The tag information is then compared against the library database, which returns the book's location; this completes the indication task.

- `P2P_Navigation`: This state executes navigation to a given point. It has three entries, one from each of the other three states. Entry from `Book_Indication` requires the book sink to be full: the first location in the queue (shared memory) filled by the indication task is assigned to a variable named `target_point`, which is the point given to the navigation task. A direct transition from `IDLE` is also possible, so that Libot works at a fixed frequency. For example, with `timeout` set to 4 hours, Libot performs a book-return operation every 4 hours even if the book sink is not full. Once Libot enters `P2P_Navigation` from `IDLE`, a re-entry is triggered on the condition that the book sink is not full, and `target_point` is assigned in the same way as above.

- `Pick_Place`: After the navigation task completes, Libot enters this state to perform the pick-and-place task. Upon completion, it returns to `P2P_Navigation`. The pick-and-place task is described in detail below.

Once Libot has returned all the books by alternating between `P2P_Navigation` and `Pick_Place`, the empty book sink triggers a re-entry that assigns the HOME position to `target_point`. Libot moves to its HOME position first and then returns to `IDLE`.
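To make the transitions concrete, here is a minimal Python sketch of the four-state design. It is not the project's implementation: the class and method names, the book-sink `capacity`, the callable standing in for the library database, and the choice to fall back to `IDLE` while the sink is not yet full are all assumptions. Only the states, `target_point`, the location queue, the `timeout`, and HOME come from the description above.

```python
from collections import deque
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    BOOK_INDICATION = auto()
    P2P_NAVIGATION = auto()
    PICK_PLACE = auto()


HOME = "HOME"


class LibotStateMachine:
    """Hypothetical sketch of Libot's four-state design, not the project's code."""

    def __init__(self, capacity, lookup_location, timeout_s=4 * 3600):
        self.state = State.IDLE
        self.capacity = capacity                # books the book sink can hold (assumed)
        self.lookup_location = lookup_location  # stands in for the library database
        self.timeout_s = timeout_s              # periodic return trip, e.g. every 4 hours
        self.queue = deque()                    # "shared memory" of pending book locations
        self.books_in_sink = 0
        self.target_point = None
        self.idle_since = 0.0

    def on_book_detected(self, tag):
        """IDLE -> Book_Indication: queue the location returned for this tag."""
        if self.state is not State.IDLE:
            return
        self.state = State.BOOK_INDICATION
        self.queue.append(self.lookup_location(tag))
        self.books_in_sink += 1
        if self.books_in_sink >= self.capacity:
            self._enter_navigation()      # full sink: Book_Indication -> P2P_Navigation
        else:
            self.state = State.IDLE       # assumption: wait for more books

    def tick(self, now):
        """IDLE -> P2P_Navigation once the timeout expires, even if the sink is not full."""
        if self.state is State.IDLE and now - self.idle_since >= self.timeout_s:
            self._enter_navigation()

    def _enter_navigation(self):
        self.state = State.P2P_NAVIGATION
        # Next queued location, or HOME once every queued book has been handled.
        self.target_point = self.queue.popleft() if self.queue else HOME

    def on_navigation_done(self, now):
        if self.target_point == HOME:
            self.state = State.IDLE
            self.idle_since = now
        else:
            self.state = State.PICK_PLACE

    def on_pick_place_done(self):
        """Pick_Place -> P2P_Navigation re-entry with the next target."""
        self.books_in_sink -= 1
        self._enter_navigation()
```

Keeping pending locations in a FIFO queue means the HOME trip falls out naturally: when the queue is exhausted, the next navigation target is HOME.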

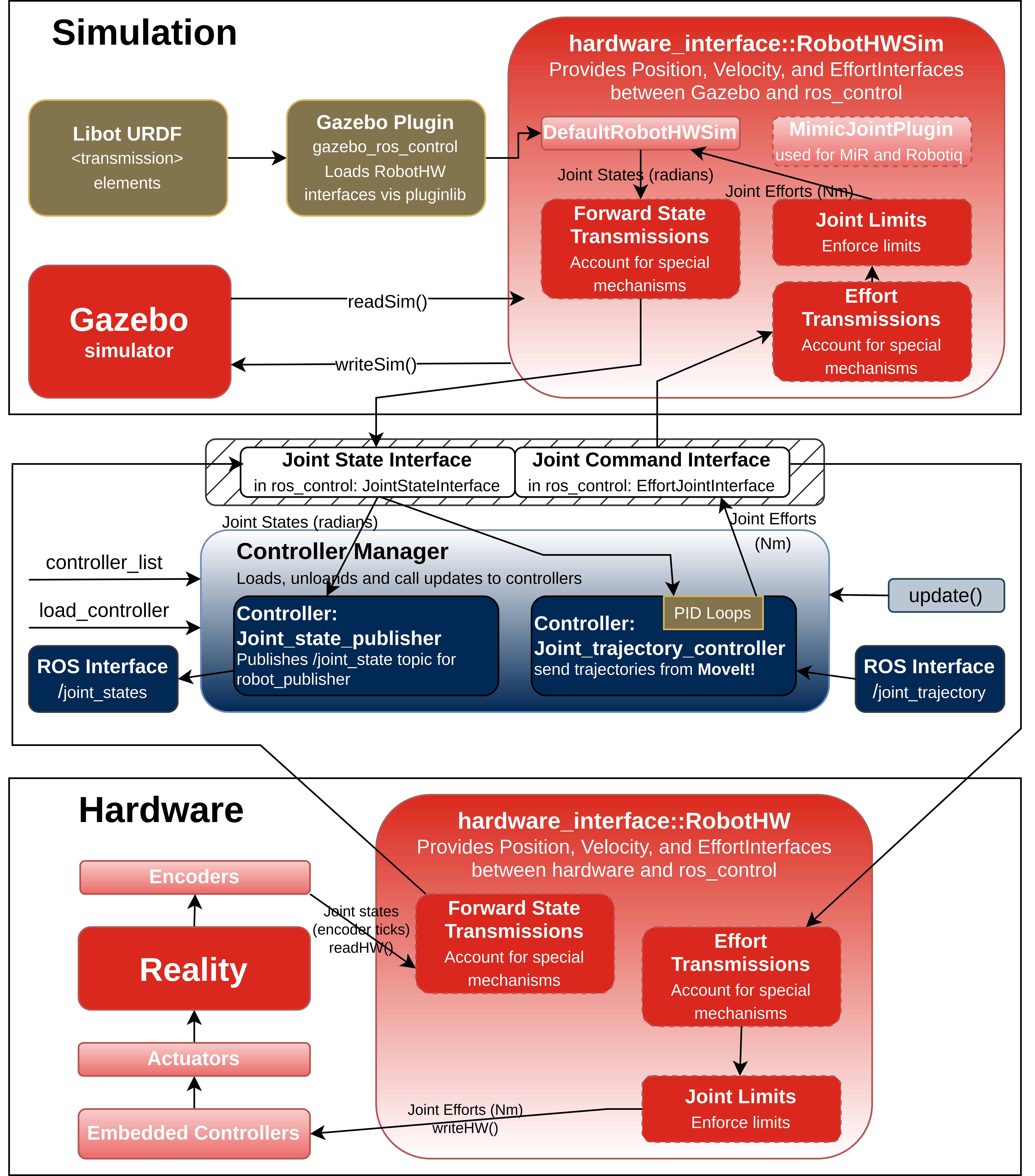

Integrating Gazebo and ROS Control

Libot was developed and tested in the Gazebo simulator. Physical parameters such as mass and inertia were defined and fine-tuned in Libot's Unified Robot Description Format (URDF) files and passed to Gazebo to mimic real-world conditions. Through the gazebo_ros_control plugin, Gazebo reads from and writes to a `gazebo_ros_control::RobotHWSim` interface, the simulated counterpart of a ros_control hardware interface, so that commands from ROS controllers are reflected in the Gazebo simulation.
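Hand-tuning inertia values is error-prone, so a small helper can compute them from a link's mass and dimensions. The sketch below assumes a link can be approximated as a uniform solid box (for example, a book-sink panel); the function names are hypothetical, and the numbers in the usage note are placeholders rather than Libot's real parameters.

```python
def box_inertia(mass, x, y, z):
    """Principal moments of inertia of a uniform solid box about its centre of mass."""
    ixx = mass / 12.0 * (y**2 + z**2)
    iyy = mass / 12.0 * (x**2 + z**2)
    izz = mass / 12.0 * (x**2 + y**2)
    return ixx, iyy, izz


def urdf_inertial(mass, x, y, z):
    """Render a URDF <inertial> element for a uniform box (off-diagonal terms are zero)."""
    ixx, iyy, izz = box_inertia(mass, x, y, z)
    return (
        "<inertial>\n"
        f'  <mass value="{mass:g}"/>\n'
        '  <origin xyz="0 0 0" rpy="0 0 0"/>\n'
        f'  <inertia ixx="{ixx:.6g}" ixy="0" ixz="0" '
        f'iyy="{iyy:.6g}" iyz="0" izz="{izz:.6g}"/>\n'
        "</inertial>"
    )
```

For example, `urdf_inertial(12.0, 1.0, 2.0, 3.0)` gives `ixx="13"`, `iyy="10"` and `izz="5"`, which can then be fine-tuned in Gazebo as described above.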

Mapping & Point2Point Navigation

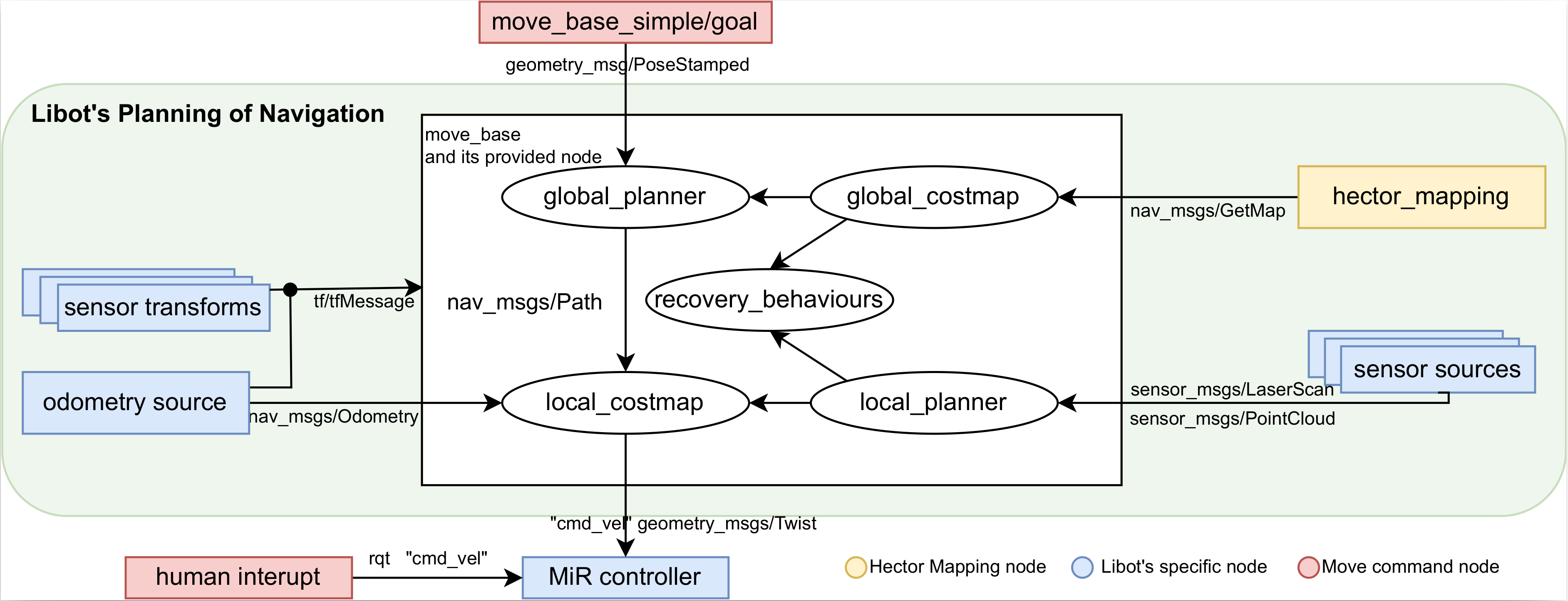

MiR is a commercial product with its own mapping and navigation technology. Libot, however, uses the open-source ROS framework and its packages for navigation. The key component is the move_base node from the ROS navigation stack, complemented by hector_mapping for SLAM. This approach uses the LiDAR and depth cameras on Libot's MiR base.

Sensory input:

- Odometry source: Provides data about Libot's movement over time, such as distance traveled and speed, based on wheel encoders or other motion sensors.

- Sensor sources: Inputs from the robot's perception hardware, such as LiDAR or depth cameras, providing `sensor_msgs/LaserScan` and `sensor_msgs/PointCloud` data.

Sensor transforms: The data from the various sensors are transformed using `tf/tfMessage`, which maintains the relationships between coordinate frames. This step aligns all sensory data to a common reference frame, helping Libot understand its environment relative to its position and orientation.
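At its core, this step is a rigid-body transform. The sketch below shows the 2D case for a planar LiDAR: each range reading becomes a point in the laser frame and is then rotated and translated into the robot base frame. The `laser_pose` argument plays the role of the static transform that tf would supply; its values and the function name are assumptions for illustration.

```python
import math


def scan_to_base_frame(ranges, angle_min, angle_increment, laser_pose):
    """Convert LaserScan-style ranges into (x, y) points in the robot base frame.

    laser_pose = (tx, ty, yaw) of the laser in the base frame (assumed values),
    i.e. the static transform tf would provide. Non-finite readings are skipped.
    """
    tx, ty, yaw = laser_pose
    c, s = math.cos(yaw), math.sin(yaw)
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r):
            continue
        a = angle_min + i * angle_increment
        lx, ly = r * math.cos(a), r * math.sin(a)  # point in the laser frame
        # Rotate by the mounting yaw, then translate by the mounting offset.
        points.append((tx + c * lx - s * ly, ty + s * lx + c * ly))
    return points
```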

Costmap generation: Both the `global_costmap` and the `local_costmap` are updated with the transformed sensor data. The `global_costmap` reflects the overall environment based on a pre-existing map, while the `local_costmap` is dynamic, reflecting immediate obstacles and changes around Libot.
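Obstacles in a costmap are usually inflated so that cells near an obstacle are also expensive. The function below sketches the exponential decay used by the inflation layer of ROS's costmap_2d; 254 and 253 correspond to costmap_2d's lethal and inscribed-obstacle costs, while the radii and scaling factor used in the usage note are placeholders, not Libot's tuned values.

```python
import math

LETHAL_OBSTACLE = 254
INSCRIBED_INFLATED_OBSTACLE = 253


def inflation_cost(distance_m, inscribed_radius, inflation_radius, cost_scaling_factor):
    """Cost of a cell `distance_m` metres from the nearest obstacle (costmap_2d-style decay)."""
    if distance_m == 0.0:
        return LETHAL_OBSTACLE                # the obstacle cell itself
    if distance_m <= inscribed_radius:
        return INSCRIBED_INFLATED_OBSTACLE    # robot centre here means certain collision
    if distance_m > inflation_radius:
        return 0                              # outside the inflated band: free space
    factor = math.exp(-cost_scaling_factor * (distance_m - inscribed_radius))
    return int((INSCRIBED_INFLATED_OBSTACLE - 1) * factor)
```

With an inscribed radius of 0.3 m, an inflation radius of 0.55 m and a scaling factor of 10, a cell 0.35 m from an obstacle costs more than one 0.45 m away, which nudges the planners toward the middle of library aisles.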

Global planner: Using the `global_costmap`, the `global_planner` computes an initial path to the destination. This path is laid out as a series of waypoints and passed to the `local_planner` as a `nav_msgs/Path`.
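ROS's global_planner can search the costmap with either Dijkstra's algorithm or A*. The following is an independent A* sketch on a small grid, not the package's code: cells at or above the `lethal` threshold are blocked, and each step costs 1 plus a penalty proportional to the cell's cost, so paths prefer open space. The returned list of cells corresponds to the waypoints that would be packed into a `nav_msgs/Path`.

```python
import heapq


def astar(grid, start, goal, lethal=253):
    """A* over a 2D costmap (list of rows); cells with cost >= lethal are blocked.

    Step cost = 1 + cell_cost / lethal, so low-cost cells are preferred.
    Returns the list of (row, col) waypoints from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible because every step costs >= 1
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]
    came_from = {start: None}
    g_score = {start: 0.0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_score[cell]:
            continue  # stale heap entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] < lethal:
                ng = g + 1.0 + grid[nr][nc] / lethal
                if ng < g_score.get((nr, nc), float("inf")):
                    g_score[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```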

Local planner: The `local_planner` refines the path provided by the `global_planner` using real-time data from the `local_costmap`. It generates a short-term path for Libot to follow safely, adjusting for unexpected obstacles and ensuring the robot's movements are kinematically feasible.
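A common way to realize this step is to sample velocity pairs, forward-simulate each for a short horizon, and discard those that come too close to obstacles, as the Dynamic Window Approach does. The sketch below is a heavily simplified, hypothetical scorer in that spirit (no acceleration limits, no costmap lookups); it is not the local planner Libot runs, and the weights, radius and horizon are assumptions.

```python
import math


def choose_velocity(pose, goal, obstacles, v_samples, w_samples,
                    dt=0.1, horizon=1.0, robot_radius=0.3):
    """Pick (v, w) by forward-simulating each sample (simplified DWA-style scorer).

    pose = (x, y, theta); obstacles = (x, y) points, e.g. from the local costmap.
    Trajectories passing within robot_radius of an obstacle are discarded; the rest
    are scored by final distance to the goal minus a small clearance bonus.
    """
    best, best_score = (0.0, 0.0), float("inf")
    steps = int(round(horizon / dt))
    for v in v_samples:
        for w in w_samples:
            x, y, th = pose
            clearance = float("inf")
            for _ in range(steps):  # unicycle model integration
                x += v * math.cos(th) * dt
                y += v * math.sin(th) * dt
                th += w * dt
                for ox, oy in obstacles:
                    clearance = min(clearance, math.hypot(ox - x, oy - y))
            if clearance <= robot_radius:
                continue  # unsafe trajectory
            score = math.hypot(goal[0] - x, goal[1] - y) - 0.1 * min(clearance, 1.0)
            if score < best_score:
                best, best_score = (v, w), score
    return best
```

With a free corridor toward the goal the straight, fastest sample wins; with an obstacle directly ahead, every forward sample is rejected and the robot stops.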

Execution of movement: Movement commands, encapsulated in `cmd_vel` messages of type `geometry_msgs/Twist`, are sent from the `local_planner` to the MiR controller. This component drives Libot's actuators, translating the velocity commands into physical motion.
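Assuming a differential-drive base such as the MiR250, the `linear.x` and `angular.z` fields of a `Twist` command map to wheel speeds through simple inverse kinematics. The track width and wheel radius are placeholders, and this sketch does not describe the MiR controller's actual internals; it only illustrates the relationship.

```python
def twist_to_wheel_speeds(linear_x, angular_z, track_width, wheel_radius):
    """Differential-drive inverse kinematics for a geometry_msgs/Twist command.

    linear_x in m/s, angular_z in rad/s; track_width and wheel_radius in metres
    (assumed values). Returns (left, right) wheel angular velocities in rad/s.
    """
    v_left = linear_x - angular_z * track_width / 2.0   # ground speed of left wheel
    v_right = linear_x + angular_z * track_width / 2.0  # ground speed of right wheel
    return v_left / wheel_radius, v_right / wheel_radius
```

A pure rotation (`linear_x = 0`) spins the wheels in opposite directions, while a pure translation drives them at equal speed.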

Hector mapping: In parallel, the `hector_mapping` node performs SLAM to update the map of the environment and localize Libot within it. This information updates the `global_costmap` and supports continuous navigation.
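hector_mapping matches each new scan against the current map and then updates the occupancy grid. The update half can be sketched as a generic log-odds occupancy update: cells where a beam ends become more likely occupied, and cells the beam passed through become more likely free. This is not hector_mapping's code; the hit and miss probabilities (0.9 and 0.4) and the clamping bounds are illustrative assumptions, although hector_mapping exposes comparable settings through its `update_factor_occupied` and `update_factor_free` parameters.

```python
import math

P_HIT, P_MISS = 0.9, 0.4                 # illustrative sensor-model probabilities
L_OCC = math.log(P_HIT / (1.0 - P_HIT))  # log-odds added when a beam ends in the cell
L_FREE = math.log(P_MISS / (1.0 - P_MISS))  # log-odds added when a beam passes through
L_MIN, L_MAX = -5.0, 5.0                 # clamping keeps cells able to change later


def update_cell(log_odds, hit):
    """Bayesian log-odds update of one occupancy cell for a single observation."""
    log_odds += L_OCC if hit else L_FREE
    return max(L_MIN, min(L_MAX, log_odds))


def occupancy_probability(log_odds):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```

Clamping matters for a library: if a trolley or shelf is moved, a few contrary observations are enough to flip a saturated cell, so the map keeps up with the changing layout.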

Recovery behaviour: If Libot encounters a problem, such as an impassable obstacle or a localization error, the `recovery_behaviors` are triggered. These behaviors try to resolve the issue, for example by re-planning the path or by clearing the costmaps so that they are rebuilt from fresh sensor data.

Human interaction: Throughout this process, a human operator can intervene by sending new commands or interrupting the current operation.

Computer Vision Book Detection

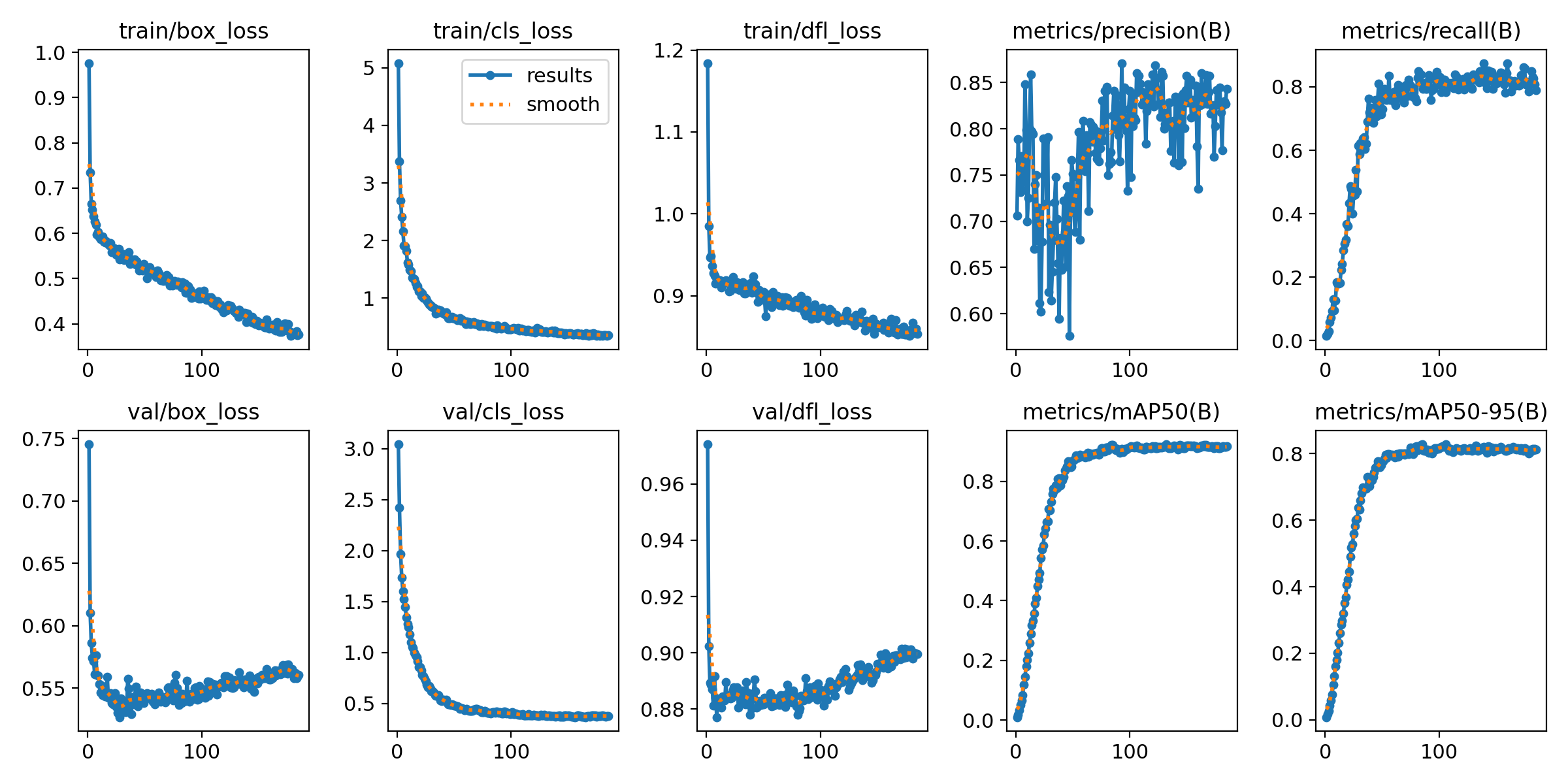

Detection results of the trained YOLOv8l model are presented in Table 4.2. The model detects objects reliably even when bounding boxes overlap to varying degrees, achieving an overall mAP50 of 92.3% and an mAP50-95 of 82.8% across all string classes.

Many thanks to my partner Zhang Tong, who resolved all ML dependencies and trained the model.
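For reference, mAP50 counts a detection as correct when its intersection over union (IoU) with a ground-truth box is at least 0.5, while mAP50-95 averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows only that matching criterion; full AP additionally ranks detections by confidence and integrates the precision-recall curve, which is omitted. Box coordinates are hypothetical `(x1, y1, x2, y2)` pixel values.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# mAP50 uses the single threshold 0.5; mAP50-95 averages AP over these ten.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def is_true_positive(pred, gt, threshold):
    """A prediction matches a ground-truth box if their IoU reaches the threshold."""
    return iou(pred, gt) >= threshold
```

A spine-label box with IoU 0.72 counts as correct at five of the ten thresholds, which is why mAP50-95 is always lower than or equal to mAP50.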

Grasp Pose Detection (GPD)

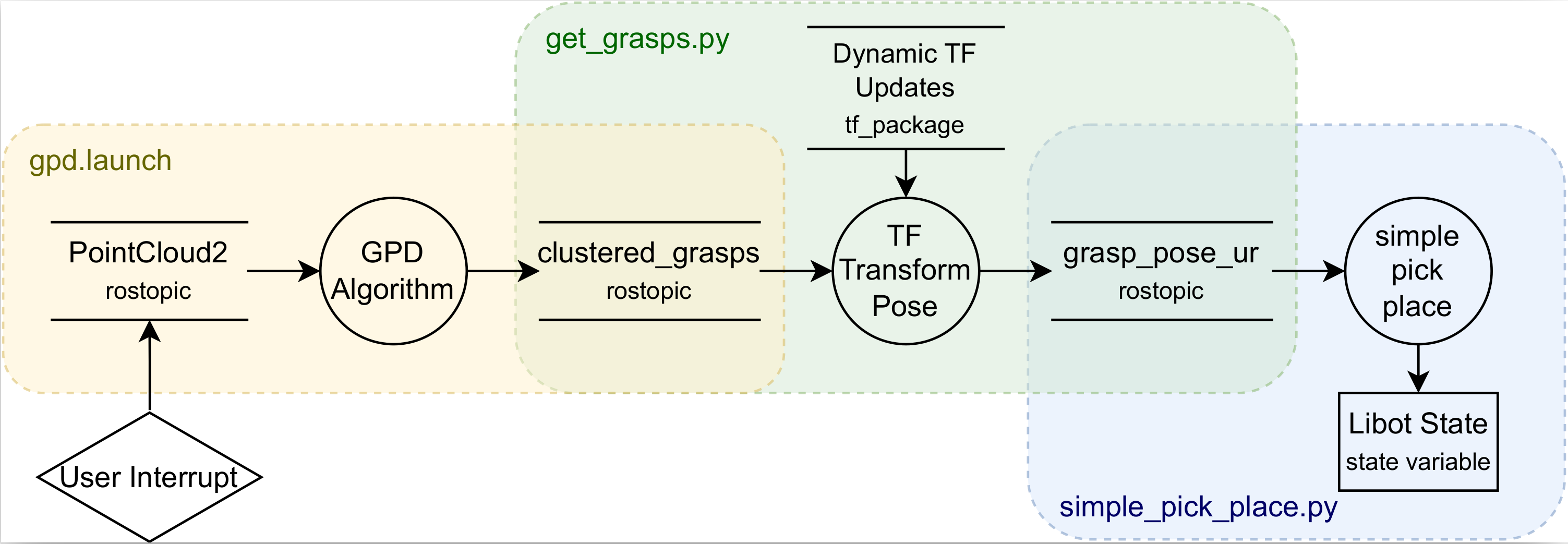

The sequence shown above consists of three core subprocesses:

GPD algorithm: This module processes the `PointCloud2` data, received as a ROS topic, to compute candidate grasps. The detected grasps are then published on the `clustered_grasps` topic, which aggregates the grasp candidates identified by the GPD algorithm.
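gpd_ros describes each grasp with a position, three orientation vectors (approach, binormal, axis) and a score. The sketch below picks the highest-scoring grasp and converts its orientation into a quaternion by using the three vectors as the columns of a rotation matrix. The `Grasp` dataclass only mirrors those message fields in simplified form so the example runs without ROS; the function names and the `min_score` filter are hypothetical, and the Robotiq 2F-140's end-effector frame may still need a fixed offset rotation.

```python
import math
from dataclasses import dataclass


@dataclass
class Grasp:
    """Simplified stand-in for a gpd_ros GraspConfig message (assumed layout)."""
    position: tuple
    approach: tuple
    binormal: tuple
    axis: tuple
    score: float


def rotation_to_quaternion(R):
    """3x3 rotation matrix (nested lists) -> quaternion (x, y, z, w)."""
    (m00, m01, m02), (m10, m11, m12), (m20, m21, m22) = R
    trace = m00 + m11 + m22
    if trace > 0:
        s = 2.0 * math.sqrt(trace + 1.0)
        return ((m21 - m12) / s, (m02 - m20) / s, (m10 - m01) / s, 0.25 * s)
    if m00 > m11 and m00 > m22:
        s = 2.0 * math.sqrt(1.0 + m00 - m11 - m22)
        return (0.25 * s, (m01 + m10) / s, (m02 + m20) / s, (m21 - m12) / s)
    if m11 > m22:
        s = 2.0 * math.sqrt(1.0 + m11 - m00 - m22)
        return ((m01 + m10) / s, 0.25 * s, (m12 + m21) / s, (m02 - m20) / s)
    s = 2.0 * math.sqrt(1.0 + m22 - m00 - m11)
    return ((m02 + m20) / s, (m12 + m21) / s, 0.25 * s, (m10 - m01) / s)


def best_grasp_pose(grasps, min_score=0.0):
    """Return (position, quaternion) of the highest-scoring grasp, or None."""
    candidates = [g for g in grasps if g.score >= min_score]
    if not candidates:
        return None
    g = max(candidates, key=lambda c: c.score)
    # Columns of the grasp rotation matrix: approach, binormal, hand axis.
    R = [[g.approach[i], g.binormal[i], g.axis[i]] for i in range(3)]
    return g.position, rotation_to_quaternion(R)
```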

TF pose transform: After grasp detection, the `clustered_grasps` must be transformed into the operational frame of reference of Libot's arm. This step converts the grasps from the `camera_depth_optical_frame` to the `booksink_link`, which serves as the reference frame for the MoveIt motion planning framework. The transformation is essential because it places the grasps within the robot's spatial model and MoveIt's planning pipeline.
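In a ROS node this is typically a tf2 lookup; the sketch below shows the underlying math. Given the pose of `camera_depth_optical_frame` expressed in `booksink_link` (a translation plus a quaternion, whose values here are made up), a grasp position is rotated and translated, and its orientation is pre-multiplied by the frame rotation. Quaternions use the ROS `(x, y, z, w)` order; the function names are hypothetical.

```python
def quat_multiply(q1, q2):
    """Hamilton product of quaternions in (x, y, z, w) order."""
    x1, y1, z1, w1 = q1
    x2, y2, z2, w2 = q2
    return (
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
    )


def quat_rotate(q, v):
    """Rotate vector v by unit quaternion q (computes q * v * conj(q))."""
    conj = (-q[0], -q[1], -q[2], q[3])
    x, y, z, _ = quat_multiply(quat_multiply(q, (v[0], v[1], v[2], 0.0)), conj)
    return (x, y, z)


def transform_pose(parent_T_child, position, orientation):
    """Express a pose given in the child frame (camera) in the parent frame (booksink).

    parent_T_child = (translation, rotation): the child frame's pose in the parent.
    """
    t, q = parent_T_child
    p = quat_rotate(q, position)
    new_position = (p[0] + t[0], p[1] + t[1], p[2] + t[2])
    new_orientation = quat_multiply(q, orientation)
    return new_position, new_orientation
```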

Simple pick-place task: The final module, depicted as a rounded rectangle, uses the transformed grasp data to drive motion planning for the UR5 robotic arm. The `simple_pick_place.py` script orchestrates the actions required for the arm to move to the desired positions and execute the pick-and-place task. Finally, it returns a new state to `Libot State`, drawn as a rectangle, which represents the shared memory that can be passed to and edited by other states.
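A typical pick sequence does not move straight to the grasp; it stops at a pre-grasp pose backed off along the approach direction, moves in, closes the gripper, and lifts. The sketch below computes those three positions; whether `simple_pick_place.py` uses the same sequence is an assumption, and the function name and the 10 cm / 5 cm distances are hypothetical. A list like this could be turned into `Pose` messages for MoveIt's Cartesian path planning (`compute_cartesian_path` on a `MoveGroupCommander`).

```python
import math


def approach_waypoints(grasp_position, approach, pregrasp_offset=0.10, lift=0.05):
    """Pre-grasp, grasp and lift positions along the grasp approach direction.

    pregrasp_offset backs the gripper off along -approach; lift raises the book
    along +z of the planning frame (e.g. booksink_link). Distances are assumed.
    """
    norm = math.sqrt(sum(c * c for c in approach))
    a = [c / norm for c in approach]  # unit approach vector
    grasp = tuple(grasp_position)
    pregrasp = tuple(p - pregrasp_offset * ai for p, ai in zip(grasp, a))
    lifted = (grasp[0], grasp[1], grasp[2] + lift)
    return [pregrasp, grasp, lifted]
```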

Manipulation planning with MoveIt!

Learn more about MoveIt: MoveIt motion planning network

Work done by this project through MoveIt:

- Set up two move groups with the MoveIt Setup Assistant, generating a ROS package that powers the MoveIt planning pipeline for Libot's manipulation tasks.

- Integrated deep-learning-based Grasp Pose Detection (GPD).

Libot’s control through MoveIt can be done in several ways:

- MoveIt Commander: This includes the MoveIt Python API and the MoveIt Command Line Tool for programmatic control of Libot’s movements.

- ROS Visualizer (RViz): An interactive tool that lets users visually plan and execute trajectories within a simulated environment.